Vishal Joshi, Head of Technology – Reference Data Services

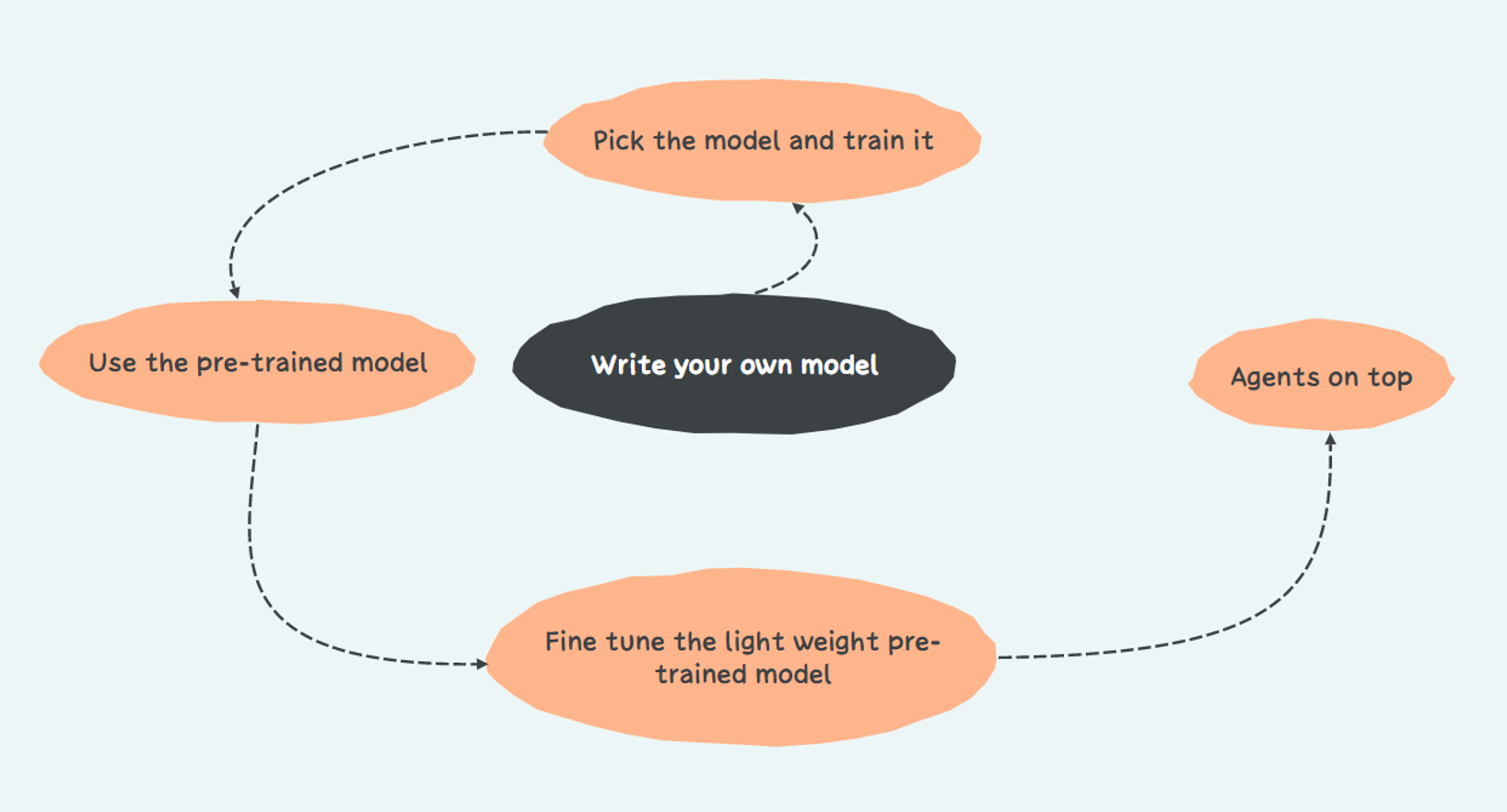

AI advancements in recent times have been fascinating. The evolution from “writing your own model” to “picking an existing model and training it” to “using pre-trained LLMs” to “fine-tuning open-source models for your needs” is nothing short of a virtuous cycle in action. I am very excited to see where it takes us, but for now let’s take a ride, learn from the advancements, and create some business value.

Imagine a very simple workflow where you must take unstructured data and turn it into a normalized JSON structure for further processing. In the traditional solution, one would build tools that let a user fill in the data points after reading and assimilating the content, every single time. But what if that must happen a million times a day? Manual entry simply cannot keep up at that volume.

The same workflow can be built with AI interventions: pick any of the tricks from the virtuous cycle above and you will have a better-performing, more scalable system. Wrap this AI tool in an agent (ah! that’s another gem altogether) and expose the agent through a user interface or APIs, and you have given business users the power to adjust the system as inputs change. The added intrinsic value of this system is the reduced time to value: the data gets to the consumer faster.
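
To make the workflow above concrete, here is a minimal sketch in Python of turning free text into a normalized JSON record. Everything named here is an assumption for illustration: the `SCHEMA` fields, the prompt wording, and `fake_model`, which stands in for whichever LLM or agent you would actually call.

```python
import json
from typing import Callable, Dict

# Hypothetical target schema: field name -> type used to normalize the value.
SCHEMA = {"issuer": str, "currency": str, "amount": float}


def build_prompt(text: str) -> str:
    """Ask the model for JSON only, listing the fields we expect back."""
    fields = ", ".join(SCHEMA)
    return (
        "Extract the following fields from the text and reply with JSON only: "
        f"{fields}.\n\nText:\n{text}"
    )


def extract(text: str, model: Callable[[str], str]) -> Dict[str, object]:
    """Call the model, parse its reply, and keep only the schema fields."""
    raw = model(build_prompt(text))
    record = json.loads(raw)
    # Normalize: drop unknown keys and coerce each value to the schema type.
    return {name: typ(record[name]) for name, typ in SCHEMA.items()}


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call; returns a canned reply (assumption)."""
    return (
        '{"issuer": "Acme Corp", "currency": "USD", '
        '"amount": "1500000", "note": "ignored"}'
    )


if __name__ == "__main__":
    print(extract("Acme Corp raised USD 1,500,000 last week.", fake_model))
```

Because the model is passed in as a plain callable, the same `extract` function can sit behind a user interface or an API, and the prompt can be adjusted without touching the rest of the pipeline.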

There were some “twinkle in the eye” moments while treading this path, such as the new lightweight Llama models, e.g., Meta-Llama-3.1-8B. What Meta has done for the world of LLMs is neat, and DeepSeek too. As the name suggests, it is a pre-trained lightweight model that one can fine-tune for a specific use case. This gives you more control over security and cost (more control does not necessarily mean lower cost). And because it is lightweight, its potential to run AI on edge devices is worth exploring, though there is a long way to go. More on the internals later, maybe in another blog.

While all of this sounds like a charm, the key things we need to be mindful of are:

- Spend time on fine-tuning and prompting the system instead of rebuilding and tweaking it every time there is a change. The key is to instruct the system the way you would instruct yourself, and see the magic.

- As they say about AI, it will certainly go wrong somewhere you have trusted it, at least once in its lifetime. As business owners, we must put the right checks and balances in place and adjust our processes to catch the fall, instead of doing the same thing repeatedly. That sounds exciting: AI is now making us do more complex and fun tasks instead of the mundane ones.

- Be aware of the costs, including the hidden ones (not knowing the consequences of your actions is a crime!), especially when using LLMs through in-context prompting, as with the GPT models. The good news is that you can optimize how you interact with LLMs, whether that means fewer input/output tokens or fewer API calls.
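
The last two points, checks and balances and cost awareness, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the required fields, the routing labels, and the per-token prices are hypothetical and not taken from any real system or provider.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical fields every extracted record must carry.
REQUIRED_FIELDS = ("issuer", "currency", "amount")


def validate(record: dict) -> List[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for field in REQUIRED_FIELDS:
        if field not in record or record[field] in ("", None):
            problems.append(f"missing field: {field}")
    amount = record.get("amount")
    if isinstance(amount, (int, float)) and amount < 0:
        problems.append("amount is negative")
    return problems


def route(record: dict) -> str:
    """Send clean records straight through and the rest to a person."""
    return "auto_accept" if not validate(record) else "human_review"


@dataclass
class Pricing:
    """Price per 1,000 tokens; the values used below are made up."""
    input_per_1k: float
    output_per_1k: float


def estimate_daily_cost(calls_per_day: int, input_tokens: int,
                        output_tokens: int, pricing: Pricing) -> float:
    """Rough daily spend: calls x (input cost + output cost) per call."""
    per_call = (input_tokens / 1000) * pricing.input_per_1k \
        + (output_tokens / 1000) * pricing.output_per_1k
    return calls_per_day * per_call


if __name__ == "__main__":
    print(route({"issuer": "Acme", "amount": -1}))
    print(estimate_daily_cost(1_000_000, 500, 100, Pricing(0.001, 0.002)))
```

With these made-up prices, a million calls a day at 500 input and 100 output tokens each comes to about 700 currency units per day, which shows why trimming prompts or batching calls is worth the effort.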

As a parting note, here is some food for thought that came up during a recent conversation with a friend who works in the Bay Area:

- How long will we keep building more efficient AI technology?

- How do we make sure humans stay in control of AI, and not the other way around? It is a real threat!

- What is next after silicon? Is silicon good enough for approximate computation? Too futuristic, perhaps?